Dmitry Gudkov has written a very nice post attempting to explain what makes QlikView so special: http://bi-review.blogspot.fi/2012/05/really-is-qlikview-bi-tool.html?m=1

Make sure to also follow the links in the article for more interesting insight.

WCF quite commonly has problems working with old and non-.NET web services. Usually the old “web reference” (ASMX) technology works better in these situations, but this one time I was determined to solve the challenge using WCF.

After having Visual Studio generate the client classes for me, I wrote a unit test to see if I could call the web service successfully. It turned out that the call succeeded and the function returned a response. Unfortunately the response object only contained the result code and description, and the data property was null.

In situations like this I usually turn first to Wireshark or a similar network packet analyzer to see what actually gets sent and returned. This time I had to come up with an alternative, as the web service only accepted a secure HTTPS address, so all the traffic was encrypted. As the calls returned a valid object with part of the expected data, I knew the authentication was working and there was nothing wrong with the message headers. This meant it was enough for me to see the message content, and writing this simple message inspector did the job.

    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Description;
    using System.ServiceModel.Dispatcher;

    // Captures the text of the last SOAP request and response sent through the endpoint.
    public class SoapMessageInspector : IClientMessageInspector, IEndpointBehavior
    {
        public string LastRequest { get; private set; }
        public string LastResponse { get; private set; }

        #region IClientMessageInspector Members

        public void AfterReceiveReply(ref System.ServiceModel.Channels.Message reply, object correlationState)
        {
            LastResponse = reply.ToString();
        }

        public object BeforeSendRequest(ref System.ServiceModel.Channels.Message request, IClientChannel channel)
        {
            LastRequest = request.ToString();
            return null; // no correlation state is needed
        }

        #endregion

        #region IEndpointBehavior Members

        // Registers this instance as a message inspector on the client runtime.
        public void ApplyClientBehavior(ServiceEndpoint endpoint, ClientRuntime clientRuntime)
        {
            clientRuntime.MessageInspectors.Add(this);
        }

        public void AddBindingParameters(ServiceEndpoint endpoint, System.ServiceModel.Channels.BindingParameterCollection bindingParameters) { }

        public void ApplyDispatchBehavior(ServiceEndpoint endpoint, EndpointDispatcher endpointDispatcher) { }

        public void Validate(ServiceEndpoint endpoint) { }

        #endregion
    }

Usage:

    // factory is assumed to be the channel factory used to create the client
    SoapMessageInspector inspector = new SoapMessageInspector();
    factory.Endpoint.Behaviors.Add(inspector);

The message inspector revealed to me that the call was sent ok and also the data returned by the server was fine. It was the WCF framework that failed to properly deserialize the response.

The property for the result data was called Any in the response class, so I took a look at the WSDL provided by the server.

    <s:complexType name="response">
      <s:sequence>
        <s:element type="s0:statusType" name="status" maxOccurs="1" minOccurs="1"/>
        <s:any maxOccurs="unbounded" minOccurs="0" processContents="skip" namespace="targetNamespace"/>
      </s:sequence>
    </s:complexType>

The any WSDL element leaves the structure of the content undefined. WCF translates this to an Any property of type XmlElement. WCF probably failed to process the response correctly because of this and the minOccurs value.

After trying to edit some of the attributes of the generated classes without success, I decided to take over the parsing of the response using a custom response formatter.

    using System;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Description;
    using System.ServiceModel.Dispatcher;
    using System.Xml;
    using System.Xml.XPath;

    // Operation behavior that swaps the default formatter for the custom one.
    public class MyResponseBehaviour : IOperationBehavior
    {
        public void ApplyClientBehavior(OperationDescription operationDescription, ClientOperation clientOperation)
        {
            // Wrap the default formatter so that requests are still serialized by WCF
            clientOperation.Formatter = new MyResponseFormatter(clientOperation.Formatter);
        }

        public void AddBindingParameters(OperationDescription operationDescription, System.ServiceModel.Channels.BindingParameterCollection bindingParameters) { }

        public void ApplyDispatchBehavior(OperationDescription operationDescription, DispatchOperation dispatchOperation) { }

        public void Validate(OperationDescription operationDescription) { }
    }

    // Parses the reply by hand and delegates request serialization to the inner formatter.
    public class MyResponseFormatter : IClientMessageFormatter
    {
        private const string XmlNameSpace = "http://www.eroom.com/eRoomXML/2003/700";

        private IClientMessageFormatter _InnerFormatter;

        public MyResponseFormatter(IClientMessageFormatter innerFormatter)
        {
            _InnerFormatter = innerFormatter;
        }

        #region IClientMessageFormatter Members

        public object DeserializeReply(System.ServiceModel.Channels.Message message, object[] parameters)
        {
            XPathDocument document = new XPathDocument(message.GetReaderAtBodyContents());
            XPathNavigator navigator = document.CreateNavigator();

            // The manager maps the "er" prefix for use in the XPath calls
            XmlNamespaceManager manager = new XmlNamespaceManager(navigator.NameTable);
            manager.AddNamespace("er", XmlNameSpace);

            if (navigator.MoveToFollowing("response", XmlNameSpace))
            {
                ExecuteXMLCommandResponse commandResponse = new ExecuteXMLCommandResponse();

                // and some XPath calls...

                return commandResponse;
            }
            else
            {
                throw new NotSupportedException("Failed to parse response");
            }
        }

        public System.ServiceModel.Channels.Message SerializeRequest(System.ServiceModel.Channels.MessageVersion messageVersion, object[] parameters)
        {
            return _InnerFormatter.SerializeRequest(messageVersion, parameters);
        }

        #endregion
    }

As the web service only provided one function returning a fairly simple response object, writing the formatter only required a couple of lines of code to parse the response data using XPath. As soon as I had replaced the default formatter with my own, things started working perfectly.

    factory.Endpoint.Contract.Operations.Find("ExecuteXMLCommand").Behaviors.Add(new MyResponseBehaviour());
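The XPath calls elided in the formatter could look roughly like the sketch below. It is a minimal illustration only: the `code` and `description` child elements of `status`, the sample XML and the `ResponseParsingSketch` class are assumptions made for this example, not something taken from the real service.

```csharp
using System;
using System.IO;
using System.Xml;
using System.Xml.XPath;

public static class ResponseParsingSketch
{
    // Namespace used by the formatter in this post
    private const string XmlNameSpace = "http://www.eroom.com/eRoomXML/2003/700";

    // Returns the text of the (hypothetical) status/description element,
    // or null when the body has no <response> element in the namespace.
    public static string ReadStatusDescription(string bodyXml)
    {
        XPathDocument document = new XPathDocument(new StringReader(bodyXml));
        XPathNavigator navigator = document.CreateNavigator();

        XmlNamespaceManager manager = new XmlNamespaceManager(navigator.NameTable);
        manager.AddNamespace("er", XmlNameSpace);

        if (!navigator.MoveToFollowing("response", XmlNameSpace))
            return null;

        // The path is relative to the <response> element we just moved to
        XPathNavigator node = navigator.SelectSingleNode("er:status/er:description", manager);
        return node == null ? null : node.Value;
    }

    public static void Main()
    {
        // Hypothetical sample body, for illustration only
        string sample =
            "<response xmlns=\"" + XmlNameSpace + "\">" +
            "<status><code>0</code><description>OK</description></status>" +
            "</response>";

        Console.WriteLine(ReadStatusDescription(sample));
    }
}
```

In the real formatter the reader would come from message.GetReaderAtBodyContents() instead of a string, and the values read would be copied into the response object.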

I found out later that the message inspector I had written earlier also gave me a way to throw exceptions with meaningful messages, as the server always included an error description in the SOAP error envelope that WCF did not reveal.

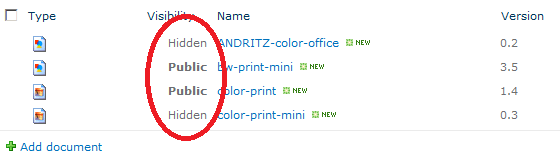

In one of my projects I wanted to allow teams to work privately with their documents but also allow the rest of the organization to access everything considered final. The two-level versioning feature made exactly this easy, but the challenge was to also find a way to encourage the teams to finally “publish” their items. The solution was to have a special column indicate the state of the item.

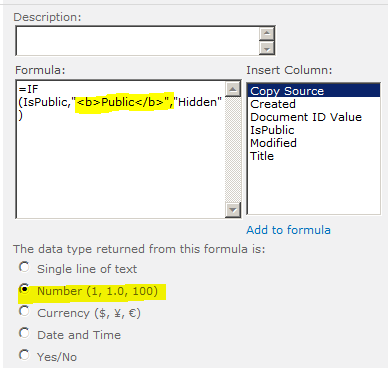

To make things more interesting, I decided to only use the dialogs of SharePoint Designer and the web interface to accomplish this. The easiest way to insert HTML into a list is by using a calculated column. Unfortunately, as I quite quickly found out, calculated columns do not update on version and approval changes. The only workaround was to create a workflow that reacts to these changes and then use the calculated column to generate the output.

This is what the list column setup looked like

The IsPublic column gets updated by the workflow and the Visibility column generates the HTML based on the value. There are many posts describing how to use a calculated column together with JavaScript to print out HTML, but all you actually need is to set the result type of the column to integer!

Using SharePoint Designer I created the following workflow for the list:

The problem with document libraries is that the file might not have been completely uploaded before the workflow kicks in. This is why the workflow needs to first make sure it can access the item before editing it.

There are probably many ways to check whether a major version is available. I decided to convert the version number (which is actually a string) to an int and check that it is higher than 0. After some trial and error I found out that the only way to convert a string representing a decimal value (yes, the version column is a text field) is to first convert it to a double.
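The workflow itself was built with the SharePoint Designer dialogs, but the same check can be sketched in C# to make the logic explicit. The `VersionCheckSketch` class and the assumption that the version text uses a dot as the decimal separator are mine, for illustration only.

```csharp
using System;
using System.Globalization;

public static class VersionCheckSketch
{
    // A version such as "0.3" is a draft; "1.0" or "2.1" has a major version.
    public static bool HasMajorVersion(string versionText)
    {
        // The version arrives as text, so parse it as a double first...
        double version = double.Parse(versionText, CultureInfo.InvariantCulture);

        // ...and then truncate it to get the major part
        int major = (int)version;
        return major > 0;
    }

    public static void Main()
    {
        Console.WriteLine(HasMajorVersion("0.3"));
        Console.WriteLine(HasMajorVersion("1.0"));
    }
}
```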

The final task was to hide the IsPublic field from the list forms and find some nice icons to indicate the visibility.

Some while ago I was asked by the CIO of my company to give a report on the Microsoft BI tool set. While gathering information I constantly found QlikView and Tableau being named as the new top dogs. Having used QlikView for a couple of years now I got interested to know more about the rival.

Just by reading blog posts and articles it already became clear that Tableau fits into the category of data analysis tools. QlikView emphasizes data discovery, so would it be fair to compare them against the same criteria?

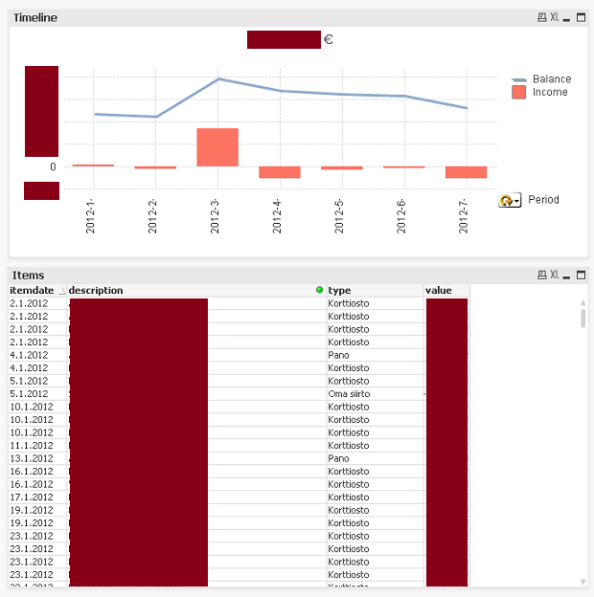

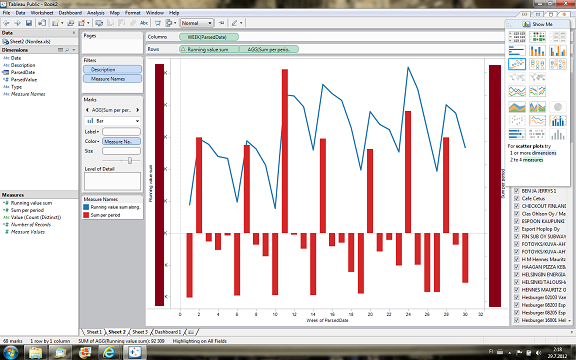

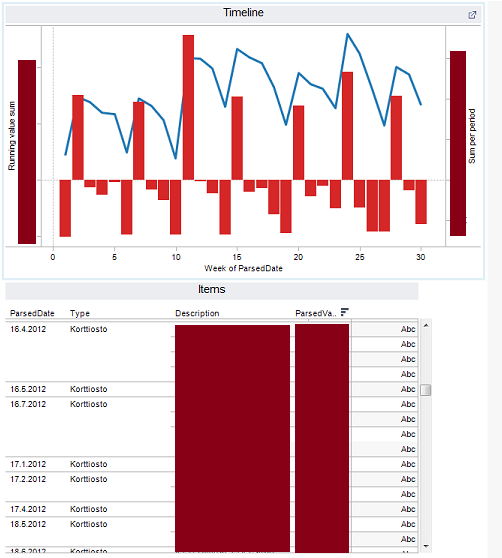

As I was planning to buy a new car, I needed to analyse the balance history of my bank account. This gave me a perfect scenario to implement in both tools, using the data I already had ready in an Excel workbook.

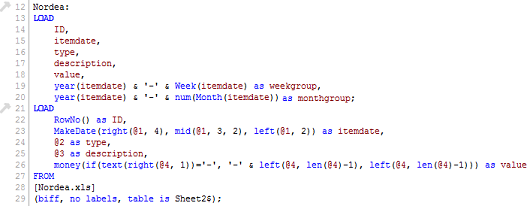

In QlikView, building a solution always starts by configuring the load script that defines how the data gets imported. QlikView is never directly connected to a data source. Instead, the information is always first imported, processed and compressed.

I had to add two data transformations: convert the date information to a correct date format and to change the numbers with a trailing negative or positive sign to the more traditional format. To help with displaying the values on a monthly and weekly timeline I also had the script generate some additional information.
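The actual transformation was written in the QlikView load script, which is not shown here. To illustrate just the trailing sign conversion, here is a small C# sketch. The `TrailingSignSketch` class and the sample values are hypothetical, and a dot is assumed as the decimal separator.

```csharp
using System;
using System.Globalization;

public static class TrailingSignSketch
{
    // Converts values such as "123.45-" or "10.00+" to ordinary signed numbers.
    public static decimal Parse(string text)
    {
        NumberStyles styles = NumberStyles.AllowLeadingSign
                            | NumberStyles.AllowTrailingSign
                            | NumberStyles.AllowDecimalPoint;

        return decimal.Parse(text.Trim(), styles, CultureInfo.InvariantCulture);
    }

    public static void Main()
    {
        Console.WriteLine(Parse("123.45-").ToString(CultureInfo.InvariantCulture));
        Console.WriteLine(Parse("10.00+").ToString(CultureInfo.InvariantCulture));
    }
}
```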

When I was ready with the importing, setting up the chart and table objects was easy. As I only had data from the beginning of this year I configured the accumulation “manually” by defining it in the chart expression enabling me to include the initial balance.

The final result looked nice and as QlikView by default already supports filtering by clicking on any visible information I did not see a need for any additional filter boxes.

My plan was to use Tableau Desktop, but as my trial license had expired I had to turn to Tableau Public, which is offered free for publishing dashboards online.

Configuring my Excel files as a data source was easy using the built-in wizard. Unlike QlikView, Tableau generally links directly to the data source, and the transformations are processed on the fly.

As the data required some transformation as described earlier, I created two additional calculated dimension fields for this. The script language was quite close to what I used in QlikView, so I could copy and paste most of what I had written earlier.

Creating the list was easy and done by drag and drop, just like when working with pivots in Excel. Configuring the chart was more of a challenge, as I needed to create some additional calculated measure fields to get the same result I had in my QlikView solution. In contrast to QlikView, I did not have to create any week and month fields, as Tableau could do the grouping directly on the available date field when I selected the desired option from the menu.

In Tableau the visual elements are defined in worksheets, and these are then composed on a dashboard. Setting up the dashboard was easy and done by placing the previously created worksheets onto a grid. As there is no standard drill down and filtering available, the presentation ended up being quite static.

Using QlikView, writing the import script and configuring the objects on the canvas requires some degree of knowledge but it also allows the user to setup complex data handling and having full freedom to define the visual appearance of the solution. QlikView enables not only setting up dashboards but also has potential as a platform for creating complete BI tools.

Despite the fact that I did not spend any time reading manuals or watching video tutorials beforehand, getting started with Tableau was easy. Tableau also offered through menu options much of the functionality that required scripting in QlikView. Unfortunately, when working with dashboards what you see is what you get, leaving less room for discovery.

I would imagine Tableau is closer to Microsoft PowerPivot in its user experience and approach than to QlikView. As the tools are of a different nature, only comparing them against specific requirements will yield a truthful conclusion.

NTFS has long supported the concept of alternate data streams. The idea is that you can store data under a file, not by inserting or appending into it but more like tagging it with data. Even though the feature is not widely known, Microsoft uses it in many places, e.g. for storing cached thumbnail images under each thumbs.db file and for marking downloaded files as blocked.

Not all shell commands support the feature, but this example should give you the idea:

    echo "hello world" > test.txt
    echo "hello you" > test.txt:hidden.txt
    notepad test.txt:hidden.txt
    dir *.txt

The first line just creates the parent for the alternate data stream created by the second command (<file or directory>:<stream name>). Note that the last dir command only shows the parent file.

Note that if you move a file, the alternative data streams will only follow as long as the destination device also uses NTFS.

You can work with alternate data streams in C#, but only by using the Windows API as none of the standard .NET components support it directly. I made the following class to easily access the file handle (open/create) for creating and modifying an alternative data stream:

    using System;
    using System.Runtime.InteropServices;
    using Microsoft.Win32.SafeHandles;

    public static class AlternateDataStreams
    {
        private const uint FILE_ATTRIBUTE_NORMAL = 0x80;
        private const uint GENERIC_ALL = 0x10000000;
        private const uint FILE_SHARE_READ = 0x00000001;
        private const uint OPEN_ALWAYS = 4;

        // Opens the named stream under the given file, creating it if it does not exist.
        public static SafeFileHandle GetHandle(string path, string name)
        {
            if (string.IsNullOrEmpty(path))
                throw new ArgumentException("Invalid path", "path");
            if (string.IsNullOrEmpty(name))
                throw new ArgumentException("Invalid name", "name");

            // <file or directory>:<stream name>
            string streamPath = path + ":" + name;

            SafeFileHandle handle = CreateFile(streamPath,
                GENERIC_ALL, FILE_SHARE_READ, IntPtr.Zero, OPEN_ALWAYS,
                FILE_ATTRIBUTE_NORMAL, IntPtr.Zero);

            if (handle.IsInvalid)
                Marshal.ThrowExceptionForHR(Marshal.GetHRForLastWin32Error());

            return handle;
        }

        [DllImport("kernel32.dll", SetLastError = true, CharSet = CharSet.Unicode)]
        static extern SafeFileHandle CreateFile(string lpFileName, uint dwDesiredAccess,
            uint dwShareMode, IntPtr lpSecurityAttributes, uint dwCreationDisposition,
            uint dwFlagsAndAttributes, IntPtr hTemplateFile);
    }

The handle can be passed to a FileStream for reading/writing.

I’m planning to use this for marking files in a way that enables my application to detect changes even if the file is left fully accessible to the users. I plan to achieve this by storing the file path and the file hash as an encrypted alternate data stream.
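As a rough sketch of the data such a stream could hold, the following computes a fingerprint from the full path and a SHA-256 hash of the content. The `FileFingerprintSketch` class, the choice of SHA-256 and the "path|hash" layout are assumptions for illustration, and the encryption step is left out entirely.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;

public static class FileFingerprintSketch
{
    // Returns "<full path>|<hex SHA-256 of the file content>".
    public static string Compute(string path)
    {
        using (SHA256 sha = SHA256.Create())
        using (FileStream stream = File.OpenRead(path))
        {
            byte[] hash = sha.ComputeHash(stream);
            string hex = BitConverter.ToString(hash).Replace("-", "");
            return Path.GetFullPath(path) + "|" + hex;
        }
    }

    public static void Main()
    {
        // Fingerprint a temporary file as a demonstration
        string path = Path.GetTempFileName();
        File.WriteAllText(path, "hello world");
        Console.WriteLine(Compute(path));
        File.Delete(path);
    }
}
```

Comparing the stored fingerprint with a freshly computed one would reveal whether the file has been moved or its content changed.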

Migrating old Visual Basic 6 applications to work on Windows 7 can be hard work. Microsoft gives an “it just works” guarantee for the old run-time, but not for the common and custom control packages heavily used in the past.

The Visual Basic 6 common controls package, which includes most of the old libraries, is available for download from Microsoft, but I could not get it to install on my Windows 7. Many of the libraries are also available on various download sites, but these files cannot be fully trusted.

I took a closer look at my Visual Basic 6 IDE installation running on one of my virtual machines and noticed that many of the libraries had a dep file by the same name. The dep files are Visual Basic setup wizard dependency files used by the old installers to point to the location from which the library could be downloaded. All of these dep files pointed to the following URL:

http://activex.microsoft.com/controls/vb6/

To my surprise the URL still worked! Using the address one can download any of the old libraries simply by adding the library name and the cab extension to the address, e.g. to retrieve the library dblist32.ocx I used the URL http://activex.microsoft.com/controls/vb6/dblist32.cab. All the common libraries like comdlg32.ocx and dblist32.ocx are there! The server returns a cab file containing the ocx file and an inf file. After downloading, all you need to do is extract the ocx file, put it in your windows\system32 directory and register it using regsvr32.
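The naming rule is simple enough to express in a few lines. This sketch only builds the address and does not download anything; the `Vb6CabUrlSketch` class name is made up for the example.

```csharp
using System;
using System.IO;

public static class Vb6CabUrlSketch
{
    // Base address found in the dep files
    private const string BaseUrl = "http://activex.microsoft.com/controls/vb6/";

    // "dblist32.ocx" -> "http://activex.microsoft.com/controls/vb6/dblist32.cab"
    public static string GetCabUrl(string libraryFileName)
    {
        string name = Path.GetFileNameWithoutExtension(libraryFileName);
        return BaseUrl + name + ".cab";
    }

    public static void Main()
    {
        Console.WriteLine(GetCabUrl("dblist32.ocx"));
        Console.WriteLine(GetCabUrl("comdlg32.ocx"));
    }
}
```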

Unfortunately the more you play around with the old libraries the quicker you face the old dll hell. The ocx libraries often depend on other ocx and dll files that then in turn depend on even more files. None of these dependencies are included in the cab file returned by the server, so to find out what additional files you need, do the following:

If you need to migrate even older applications starting from VB3, you can start by downloading the old run-times from here.

It’s been a while since I worked with Java and especially JDBC. Today I spent a while trying to get the Oracle BI Publisher to connect to a SQL Server 2005 instance.

I found various examples of different ways to define the instance name in the connection string. After trying them all I could only conclude that defining the instance specific port number is the only way to get a connection established.

jdbc:hyperion:sqlserver://<server>:<port>;DatabaseName=<database>...

Reading the MSDN documentation a bit closer also revealed that it is the recommended way.

How do I know what the instance specific port number is? Easy!

The port number needed is the item named TCP Dynamic Ports.

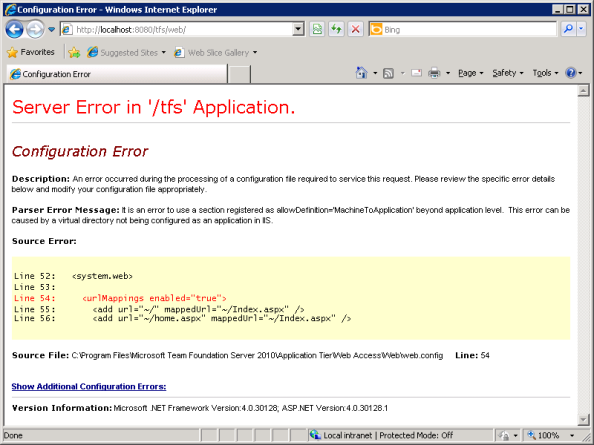

Setting up Team Foundation Server 2010 RC was easy, especially when selecting the Basic profile as it does not require you to setup SharePoint and the SQL Server Reporting Services. Everything on the Visual Studio and source control side worked well.

But when I fired up my browser to have a look at Web Access, now a part of the standard TFS, IIS prompted me with the following error:

I was not sure if the Basic profile included the Web Access as I could not find any info on it on the web. The IIS diagnostic tools suggested the server had problems accessing the folder so I tried all sorts of things with the security settings. By googling I found some solutions for older IIS versions, but I didn’t want to do anything that would e.g. prevent me from updating my TFS setup later.

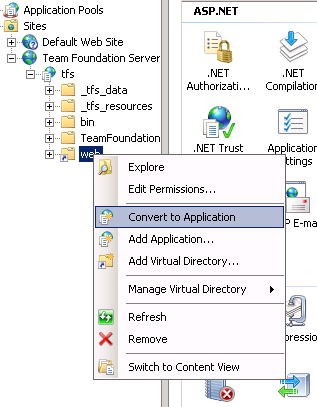

Finally I filed a bug report on Microsoft Connect, and as the solution they suggested was the same one I had found earlier, I tried it.

For some reason the installation of the web access web application had gone wrong. All I needed to do to fix the problem was to convert the web folder into an application:

Seconds later I could access projects using the browser!

Unfortunately my problems with the Web Access did not end there. At the moment I’m unable to create child work items using the web access interface:

Again, I’ve made a bug report on it. I will edit this post when I find a solution. If you are having any of these problems, give my bug reports your vote!

Working with SQL Server CE 3.5 I noticed that it does not support the ISNULL function. Instead you have to use the COALESCE function to achieve the same thing.

The COALESCE function can be used in much the same way as the ISNULL function. It returns the first non-null value in the parameter list.

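This will give you all the rows where some_id_field equals the @filtering_id parameter. Rows where some_id_field is null are treated as -1, so they only match if -1 is passed as the parameter (some_table is a placeholder for your table name):

    SELECT some_value FROM some_table WHERE COALESCE(some_id_field, -1) = @filtering_id;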

When writing a custom user control you might at some point be tempted to use it as an item container. If you then try to name the nested control you will get the following error:

Cannot set Name attribute value 'myControl' on element 'SomeControl'. 'SomeControl' is under the scope of element 'ContentPanel', which already had a name registered when it was defined in another scope.

This is unfortunately a common problem caused solely by the XAML parser.

The easiest solution to overcome this is by constructing the control yourself. I did this by defining my XAML in a resource dictionary and then writing the lines required to load it at runtime. The only downside in this approach is that no designer preview is available.

Here are the 3 easy steps to write a simple container with a title on top:

1. Add a new class (ContentPanel.cs) and a Resource Dictionary (ContentPanel.xaml) to your WPF project.

2. Then write your XAML into the dictionary defining the style for your control:

    <ResourceDictionary
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        xmlns:local="clr-namespace:MyNamespace">
      <Style TargetType="{x:Type local:ContentPanel}">
        <Setter Property="Template">
          <Setter.Value>
            <ControlTemplate TargetType="{x:Type local:ContentPanel}">
              <Grid Background="Transparent">
                <Grid.RowDefinitions>
                  <RowDefinition Height="18"/>
                  <RowDefinition Height="*" />
                </Grid.RowDefinitions>
                <Border Grid.Row="0" Background="#FF6C79A2" CornerRadius="4 4 0 0" Padding="5 3 5 3">
                  <TextBlock VerticalAlignment="Center" Foreground="White"
                             Text="{Binding Path=Title, RelativeSource={RelativeSource FindAncestor, AncestorType={x:Type local:ContentPanel}}}"/>
                </Border>
                <ContentPresenter Grid.Row="1"/>
              </Grid>
            </ControlTemplate>
          </Setter.Value>
        </Setter>
      </Style>
    </ResourceDictionary>

3. Finally, write the class so that it loads the resource dictionary at runtime:

    using System;
    using System.Windows;
    using System.Windows.Controls;

    public class ContentPanel : UserControl
    {
        public static readonly DependencyProperty TitleProperty =
            DependencyProperty.Register("Title", typeof(string), typeof(ContentPanel),
                new UIPropertyMetadata(""));

        public ContentPanel()
        {
            Initialize();
        }

        // Loads the style from the resource dictionary instead of relying on the XAML parser.
        private void Initialize()
        {
            ResourceDictionary resources = new ResourceDictionary();
            resources.Source = new Uri("/MyAssemblyName;component/ContentPanel.xaml",
                UriKind.RelativeOrAbsolute);
            this.Resources = resources;
        }

        // Text shown in the title bar of the panel
        public string Title
        {
            get { return (string)GetValue(TitleProperty); }
            set { SetValue(TitleProperty, value); }
        }
    }

Don't forget to vote on Microsoft Connect to get Microsoft to fix the XAML parser!